Pallet Verifier

- Team Name: David Semakula

- Payment Address: 16AExTwdZnzJ4UDM1MeQ3cNuetTtFz1yrpYTaVqCNJtmeppK (AssetHub USDT)

- Level: 2

Project Overview 📄

This application is a response to the RFP for Static Analysis for Runtime Pallets.

Overview

pallet-verifier is a tool for static analysis of FRAME runtime pallets that are used for building Substrate-based blockchains.

Background

FRAME pallets are modules that implement the business logic for the different use cases and features that you might want to include in a blockchain runtime. This enables developers to easily create application-specific Substrate-based blockchain runtime environments from a modular set of components.

Objective

pallet-verifier aims to detect common security vulnerabilities found in FRAME pallets using static program analysis techniques like data-flow analysis, abstract interpretation and symbolic execution.

Project Details

Introduction

This stage of the project will focus on detecting panics and arithmetic overflow/underflow (and lossy integer conversions) in dispatchable functions/extrinsics of FRAME pallets. However, other classes of security vulnerabilities (e.g. insufficient or missing origin checks, bad randomness, insufficient unsigned transaction validation e.t.c) will also be targeted in the future.

Architecture

FRAME is a Rust-based DSL (Domain Specific Language), therefore, the source code representation that pallet-verifier will analyze is Rust's MIR (Mid-level Intermediate Representation). This is because MIR is "a radically simplified form of Rust that is [ideal] for certain flow-sensitive [analysis]" (see also this and this).

As such, pallet-verifier will be built as a custom rust compiler (rustc) driver which will use MIRAI as a backend for abstract interpretation (and in the future, also as a tag and taint analysis engine).

Additionally, our custom rustc driver will be distributed as a custom cargo sub-command

(e.g. cargo verify-pallet) to allow for a seamless and familiar developer experience.

MIRAI Integration

At this stage of the project, we'll use MIRAI to detect panics and arithmetic overflow/underflow (and lossy integer conversions). However, as noted before, MIRAI also includes a tag and taint analysis framework that can be used for applicable analyses in the future.

Panics

Finding potential panics (abrupt terminations) is one of MIRAI's primary use cases. MIRAI does this by performing a path-sensitive analysis. This means that given an entry point, MIRAI "will analyze all possible code paths that start from that entry point and determine if any of them can reach a program point where an abrupt runtime termination will happen". Notably, "[this] use case generally requires no annotations".

Arithmetic overflow/underflow (and lossy integer conversions)

MIRAI can detect potential arithmetic overflow/underflow due to arithmetic operations,

if its diagnostic level is set to library or paranoid (i.e. --diag=library|paranoid),

and either the rustc "overflow-checks" (i.e. -C overflow-checks=true|on|yes|y) or

"debug-assertions" (i.e. -C debug-assertions=true|on|yes|y) flag is enabled.

This use case also generally requires no annotations, because when the aforementioned rustc flags are enabled,

rustc automatically adds instructions for dynamic overflow checking, which MIRAI can use

to detect potential arithmetic overflow/underflow.

However, there's one exception, because dynamic overflow checks are not added

(see also) for as conversions (i.e. type cast operations) e.g.

fn convert_with_overflow(val: u16) -> u8 {

val as u8

}

Therefore, MIRAI cannot automatically detect arithmetic overflow/underflow (and lossy conversions)

due to explicit as conversions, even when the aforementioned MIRAI options and rustc flags are enabled.

As such, pallet-verifier will need to automatically add annotations that allow MIRAI to verify that all reachable

as conversions are safe/lossless (or emit a warning otherwise).

Adding MIRAI annotations automatically

At this stage of the project, pallet-verifier will only add annotations for verifying that integer as conversions

don't overflow/underflow nor lose precision.

The first step of this process is finding all integer as conversions that are either narrowing or lossy conversions

(i.e. the range of the source type is not a subset of that of the final type e.g. an as conversion from u16 to u8

or u8 to i8). This will be done by implementing a MIR visitor that finds Rvalues of

the Rvalue::Cast(CastKind, Operand<'tcx>, Ty<'tcx>) variant, and cast kind of

CastKind::IntToInt, and comparing the source type to the final type.

Then, for the identified narrowing and lossy conversions, pallet-verifier will add MIRAI verify!

annotations with predicates that bound the operand within the range of the final type of the cast expression

(e.g. for a u16 to u8 conversion, the predicate would be val <= u8::MAX where val represents

the operand of the type cast operation).

Adding MIRAI annotations to MIR

pallet-verifier will add MIRAI annotations by overriding rustc's

optimized_mir query (see also this and this).

This is notably "the only query that can fetch non-local MIR" which will allow us to

detect potentially lossy and overflowing/underflowing as conversions introduced by calls to third-party code/ dependencies.

It's also notably the rustc query that MIRAI uses to get the MIR that it interprets (see here and

here) for similar reasons.

However, this presents a challenge because MIRAI annotations are ordinarily exposed as Rust declarative macros

(e.g. verify!), but macro expansion has already been completed (i.e. during lexing and parsing)

by this stage of the compilation pipeline (i.e. code generation).

So pallet-verifier will need to add MIRAI annotations using their equivalent MIR instructions

as opposed to the typical declarative macros.

Fortunately, MIRAI's declarative macros expand to relatively straight-forward calls to simple functions with

noop bodies, that MIRAI treats as special intrinsic/built-in instructions during abstract interpretation

(see this, this and this).

For example, the verify! macro expands to a call to the

mirai_verify function which is defined as follows in the MIRAI annotations crate:

pub fn mirai_verify(_condition: bool, _message: &str) {}

This makes it relatively straight-forward to retrieve the DefId of the target function

(e.g. mirai_verify) and use it to construct MIR for calling the function,

thus adding a MIR instruction equivalent to using the corresponding declarative macro (e.g. verify!).

Adding the MIRAI-annotations crate as a dependency

It's improbable that any of the FRAME pallets that pallet-verifier will be invoked on will include the

mirai-annotations crate as a dependency, but we'll need it included as a dependency to allow us

(and MIRAI) to use/access DefIds (and related definition information) when

adding (and interpreting) annotations as described in the previous section.

The process for "silently" adding the mirai-annotations crate (i.e. without modifying target FRAME pallet crates) will actually be relatively straight-forward, because:

- the mirai-annotations crate is essentially just a single relatively small

lib.rsfile with no dependencies - pallet-verifier will be built as a custom rustc driver and cargo subcommand that

will also set itself as the

RUSTC_WRAPPERwhen it's invoked by cargo.

The process, in more detail, will work as follows:

- first we'll compile the

lib.rsfile (or just its relevant content) as a library by invokingrustcdirectly. - then, because pallet-verifier will be the

RUSTC_WRAPPERwhen it's invoked, we can simply add an additional--extern mirai_annotations=/path/to/compiled-artifact.rlibargument to the arguments received from cargo when invoking rustc on the target FRAME pallet.

Identifying (and generating) tractable entry points

The generics challenge

FRAME is inherently a generic framework because it makes extensive use of Rust generic types and traits,

most notably for defining the configuration and "dependencies" of pallets using a Config trait and

the #[pallet::config] attribute macro.

Additionally, generic types are used extensively when defining other entities, including in the Origin parameter for

dispatchable functions/extrinsics, which is typically declared as a generic associated type

origin: OriginFor<T>.

This presents a challenge when performing analysis with MIRAI, because "it is not possible for a generic or higher order function to serve as an entry point". This is because "it is necessary for MIRAI to resolve and analyze all functions that can be reached from an entry point".

Too many macro-generated entry points

Another challenge is the fact that when MIRAI is invoked with its default configuration using cargo mirai,

it will attempt to analyze all entry points

(i.e. "[all] function[s] that can be called externally [... e.g.] public function[s] of a library crate").

In the context of a FRAME pallet, this essentially means MIRAI with attempt to analyze all functions with

pub visibility. This ends up being a significant challenge for efficiency, and a cause of timeouts,

because even a relatively simple example pallet definition like the one used by the

Supercomputing Systems AG (SCS) team in the

previously completed research grant yields about ~300 entry points (i.e. public functions)

after macro expansion (you can inspect the list of entry points by running

cargo clean && MIRAI_FLAGS="--print_function_names" cargo mirai).

The TestExternalities and SubstrateHostFunctions challenge

A typical solution to the above two challenges is to analyze using only unit tests as entry points

by running cargo mirai --tests. This is because unit tests provide concrete types for

all generic types and traits, and will typically be a manageable number for a single crate.

This seems like a viable solution for our use case, since it's a standard best-practice for Substrate/FRAME developers

to write unit tests for all dispatchable functions/extrinsics.

However, here too we run into some challenges, presumably because the SubstrateHostFunctions

and TestExternalities implementation needed to run unit tests

introduces too much complexity in the form foreign functions for which MIRAI can't access MIR,

combined with some complex indirection, and so the analysis either aborts or timeouts.

Another efficiency concern for this approach is the fact that it's quite common for a unit test to exercise a single target function multiple times, or even for multiple unit tests to be written for a single function. However, while this is perfectly reasonable for unit testing, it's quite wasteful in an entry point for abstract interpretation.

Finding tractable entry points

Since what prevents us from directly using dispatchable function/extrinsic definitions as

entry points is the fact that they're generic, while unit tests provide concrete implementations

of the required generic types and traits, but introduce too much complexity presumably because of

foreign functions and complex indirection related to

SubstrateHostFunctions and TestExternalities implementations.

A hybrid solution that uses the concrete types and arguments from unit tests to "specialize" direct calls to the

dispatchable functions/extrinsics (i.e. bypassing calls to

sp_io::TestExternalities::new(..).execute_with(|| { .. }),

frame_system::GenesisConfig::default().build_storage() e.t.c) seems viable.

In fact, the viability of such a hybrid solution has already been successfully demonstrated in the previously completed research grant delivery in which such a "hybrid" entry point was manually implemented, and configuring MIRAI to only analyze this entry point eliminated the timeouts and aborts that were otherwise experienced when MIRAI attempted to analyze all entry points as detailed here.

However, manually implementing these "hybrid" entry points is not scalable, so pallet-verifier will need to generate them automatically.

Generating tractable entry points automatically

The first step of this process is finding a function call for each dispatchable function/extrinsic

(i.e. presumably via "specialized" calls of dispatchable functions in unit tests).

This will be done by retrieving the DefIds for all dispatchable functions,

and then implementing a MIR visitor that finds Terminators of the

TerminatorKind::Call { func, args, .. } variant whose func operand matches/wraps

the DefId of a dispatchable function.

Then, the concrete types and argument values used in the discovered "specialized" dispatchable function calls will be used to construct/generate tractable entry points (i.e. definitions of minimal public functions that make dispatchable calls similar to this "hybrid" entry point from the previous research deliverable).

Note that, at this point we can emit a warning for any dispatchable functions for which no "specialized" call could be found (i.e. presumably because no unit tests are defined for it), or even abort with an error message, if no "specialized" calls are found for any of the dispatchable functions.

Switching our focus back to the generated tractable entry points, one challenge here is that we'll need DefIds

for these generated tractable entry points (inorder to pass them to MIRAI for analysis), but DefIds are created

during HIR (High-Level Intermediate Representation) lowering (see also).

Fortunately, rustc's query-based and demand-driven compilation model means it has excellent support for incremental compilation/analysis (see also), this allows us to (strategically) add our generated entry points to the target FRAME pallet's "virtual" source code without needing to recompile any unrelated code.

Lastly, while adding our generated entry points, we'd prefer not to edit the target FRAME pallet's

"actual" source code (i.e. no changes on disk), so our custom rustc driver will use rustc's

FileLoader API to provide a custom FileLoader that feeds

our generated entry points directly into the compiler (i.e. without modifying the FRAME pallet's

"actual" source code on disk - hence the "virtual" phrasing in the last paragraph).

Using MIRAI as a library

At this point, we've already detailed how we'll use rustc as a library via our custom rustc driver, in order to customize compilation and analysis to our target domain (i.e. analyzing FRAME pallets). Similarly, a few considerations mean we'll need to use MIRAI as a library as well, to give us more flexibility in resolving a few domain-specific challenges related to analyzing FRAME pallets.

It was noted in the previously completed research grant delivery that the diagnostic level

had to be set to paranoid (i.e. --diag=paranoid) for MIRAI to emit some expected warnings.

However, the paranoid diagnostic level produces a lot of warnings (by design),

since it's maximally strict and so warns about "any issue that may be an error", by essentially disabling

some of MIRAI's "normal" mechanisms for reducing false positives, like

"suppressing any diagnostics that arise within the standard library or third party code

[... i.e. diagnostics that are [not] tied to source locations within the [current] crate]".

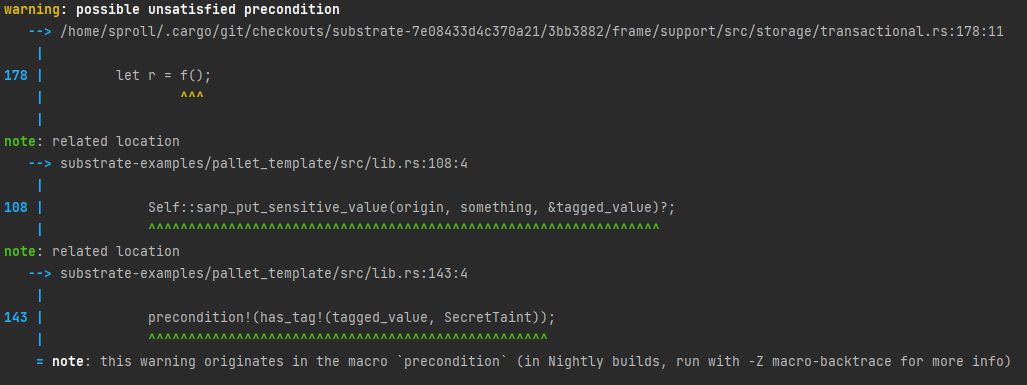

However, by examining the reported diagnostic from the previous research delivery (see image below), we can make a few important observations:

- The primary span/location for the diagnostic is pointing to the

frame_supportpallet, and specifically theframe_support::storage::with_storage_layerfunction, which explains why this diagnostic is only emitted at theparanoidlevel (i.e. it's arising from a third-party library as far as MIRAI is concerned). - There are still some sub-diagnostics relating the primary diagnostic to the current crate

(i.e. the

note: related location ...lines).

Armed with this information, we can use cargo expand to have a look at the final code (i.e. after macro expansion)

for the target dispatchable function (i.e. the do_something function) below:

impl<T: Config> Pallet<T> {

/// ...

pub fn do_something(origin: OriginFor<T>, something: u32) -> DispatchResult {

frame_support::storage::with_storage_layer(|| {

let who = ensure_signed(origin.clone())?;

Self::sarp_put_sensitive_value(&origin, something)?;

Self::deposit_event(Event::SomethingStored {

something,

who,

});

Ok(())

})

}

/// ..

}

Notice that FRAME wraps the user written body of the dispatchable function, with a call to

frame_support::storage::with_storage_layer, and presumably the indirection

introduced by this closure-based invocation, combined with the complexity introduced by the way

procedural macros report call-site information leads to MIRAI reporting the

closure call inside the frame_support::storage::with_storage_layer function as the

primary source location for the diagnostic.

Presumably, we can find ways of "fixing" this in MIRAI, while taking care not to introduce false positives in other adjacent cases, but such a generic fix will likely be non-trivial. Nevertheless, such efforts will be pursued, but not as part of the scope of this grant application.

However, an alternative approach is to use our domain-specific knowledge to determine which diagnostics to either "suppress" or "promote", because, being a domain-specific tool, pallet-verifier will always "know" more about the analysis context than MIRAI. Therefore, our approach will be to integrate with MIRAI as a library. This will allow us to access MIRAI's diagnostics buffer directly, and use our own custom filters to determine which diagnostics to emit based on: the reported primary location, reported related locations, and taking into account the behavior of FRAME macro generated code.

Another benefit of this approach is it can lead to some efficiency gains by bypassing some redundant work in MIRAI

(e.g. iterating over all body owners to find the DefId of the target function,

when pallet-verifier already knows the relevant DefId and so can just call

the analyze_body function directly e.t.c).

Lastly, another lever for reducing false positives (i.e. irrelevant warnings) related to incomplete analysis due to missing MIR bodies for "foreign functions" (e.g. standard library functions that aren't inlined, and system calls), is to add "summaries/foreign contracts" for these "foreign functions", thus making the analysis more precise. While this part of MIRAI is not that well documented, it's not that hard to figure out how to add these "summaries/foreign contracts" from the existing examples and related utilities, which actually show that these "summaries/foreign contracts" can be defined outside MIRAI, which certainly gives us more flexibility without having to modify MIRAI itself.

Benchmarking performance and accuracy

This stage of the project will create a simple benchmark to showcase the performance and accuracy metrics of pallet-verifier on a few production pallets (e.g. 3-5 pallets from this list). For each pallet in the benchmark suite, we'll add bad variants of dispatchable functions by replacing proper error handling and safe arithmetic constructs with panicking and overflow/underflow-ing equivalents, while also keeping the good variants unedited. The benchmarks will then report execution time and ratio of true vs false positives for each dispatchable function, as well as aggregates for these metrics for the target pallets and the entire benchmark suite.

In general, we'll be motivated to reduce the amount of false positives (if any), and that could be viewed as "gaming the benchmark". However, for our use case, this will be desirable behavior as long as the fixes applied to remove/reduce the false positives are generic in nature (i.e. the fixes are applicable to any pallet, and don't just simply detect the presence of a specific pallet and adjust the behavior of the tool based on this knowledge).

Other considerations

Finally, an important consideration to keep in mind is that MIRAI "is intended to become a widely used static analysis tool for Rust", and in the wider Rust ecosystem, it's quite common (and sometimes even reasonable) for functions to panic in cases when they're called with "problematic" arguments.

Because of this, MIRAI makes a lot of effort to try to

suppress warnings that would be perceived as false positives in the context of the wider Rust ecosystem.

However, some of these warnings are legitimate bugs in the context of a blockchain runtime.

A notable example of this is MIRAI's precondition inference which has the effect of

suppressing warnings about possible panics if the panic is conditional on a parameter of the entry point e.g.

MIRAI will not report a panic from the code snippet below, even at the paranoid diagnostic level,

if it's analyzed as an entry point.

pub fn hates_zeros(val: u8) {

if val == 0 {

panic!("I hate zeros!");

}

}

Note however, that this is only problematic for our use case if it happens in an entry point.

This is because when hates_zeros is not an entry point, the inferred precondition (i.e. verify!(val != 0)),

will need to be satisfied by the function's callers.

This particular behavior ends up not being an issue for our proposed architecture, because our entry points will be simple and artificially constructed with no parameters and no branching.

Technology Stack

Rust - will be used for implementing all components of pallet-verifier.

Ecosystem Fit

FRAME pallets are an essential building block for composing Substrate-based blockchain runtimes, therefore, as rightfully identified by the RFP for Static Analysis for Runtime Pallets, tools like pallet-verifier that integrate directly into developer workflows, and detect and warn developers about potential security vulnerabilities in pallets are essential for improving security in the Polkadot/Substrate/Kusama ecosystem.

Team 👥

Team members

- David Semakula davidsemakula

Contact

- Contact Name: David Semakula

- Contact Email: hello@davidsemakula.com

- Website: https://davidsemakula.com

Legal Structure

I'll be working as an individual.

- Registered Address: N/A

- Registered Legal Entity: N/A

Team's experience

I'm an independent Software Engineer & Systems Architect with over 10 years of experience.

I'm the creator of ink! analyzer (a collection of modular and reusable libraries and tools for semantic analysis of ink! smart contracts). Notably, like FRAME, ink! is also a Rust DSL. I also independently contribute to rust-analyzer (a Rust compiler front-end for IDEs).

I've previously worked as a technical lead on projects for HubSpot, Permobil, Pressboard, Grindery, InboundLabs, Tunga, ButterflyWorks, Telegraaf Media Groep (TMG) and many more companies.

I hold a Bachelor of Science in Computer Science degree from Makerere University, Kampala, Uganda. You can find my full profile at https://davidsemakula.com.

ink! analyzer was previously funded by two Web3 Foundation grants (see this and this).

Team Code Repos

- https://github.com/davidsemakula

- https://github.com/ink-analyzer/ink-analyzer

- https://github.com/ink-analyzer/ink-vscode

- https://github.com/wamutech/wamu-rs

- https://github.com/wamutech/wamu-spec

- https://github.com/davidsemakula/substrate-vault

- https://github.com/mukutu-tech/mukutu-safe

Team LinkedIn Profiles (if available)

- https://davidsemakula.com (I use my own website for my profile)

Development Status 📖

A significant amount of research of internals of the related tools (i.e. rustc and MIRAI), as well as a review of the previously completed research delivery by Supercomputing Systems AG (SCS), has been done to inform the proposed architecture of pallet-verifier, as described in detail in the Project Details section above. Beyond this, no meaningful development has started for this project.

Development Roadmap 🔩

Overview

- Total Estimated Duration: ~4 months

- Full-Time Equivalent (FTE): 1

- Total Costs: 30,000 USD

Milestone 1 — rustc driver, cargo subcommand, basic MIRAI integration, and automatic tractable entry point generation

- Estimated duration: ~7-8 weeks

- FTE: 1

- Costs: 15,000 USD

| Number | Deliverable | Specification |

|---|---|---|

| 0a. | License | MIT or Apache 2.0 |

| 0b. | Documentation | I will provide detailed source documentation including rustdoc documentation, a document describing the design and high-level architecture of pallet-verifier (i.e. similar to the project details section of this document), and a README file providing general information about the tool, installation and usage instructions, and links to other documentation and resources related to the tool. |

| 0c. | Testing and Testing Guide | Core functions will be fully covered by comprehensive UI tests to ensure functionality and robustness. Additionally, a benchmark suite that showcases the performance and accuracy metrics of the tool on 3-5 production pallets will be implemented. In the guide, I will describe how to run these tests and benchmarks. |

| 0d. | Docker | N/A |

| 1. | Rust binary crate | I will create a Rust binary crate that implements a custom rustc driver, custom cargo subcommand, basic MIRAI integration (i.e. excluding automatic annotation capabilities), and automatic tractable entry point generation which will enable invoking pallet-verifier as a cargo subcommand (e.g. cargo verify-pallet) on FRAME pallets to detect potential panics and arithmetic overflow/underflow due to arithmetic operations (i.e. excluding as conversions/ type cast operations). |

Milestone 2 — MIR annotation capabilities and detecting arithmetic overflow/underflow (and lossy integer conversions) due to as conversions

- Estimated duration: ~7-8 weeks

- FTE: 1

- Costs: 15,000 USD

| Number | Deliverable | Specification |

|---|---|---|

| 0a. | License | MIT or Apache 2.0 |

| 0b. | Documentation | I will provide detailed source documentation including rustdoc documentation, a document describing the design and high-level architecture of pallet-verifier (i.e. similar to the project details section of this document), and a README file providing general information about the tool, installation and usage instructions, and links to other documentation and resources related to the tool. |

| 0c. | Testing and Testing Guide | Core functions will be fully covered by comprehensive UI tests to ensure functionality and robustness. Additionally, a benchmark suite that showcases the performance and accuracy metrics of the tool on 3-5 production pallets will be implemented. In the guide, I will describe how to run these tests and benchmarks. |

| 0d. | Docker | N/A |

| 0e. | Article | I will publish an article that explains how pallet-verifier works, the security vulnerabilities it can detect, and how to use it to analyze FRAME pallets. |

| 1. | Rust binary crate update | I will update the Rust binary that implements pallet-verifier to support adding the mirai-annotations crate as a dependency to FRAME pallets without editing Cargo.toml, adding MIRAI annotations to MIR, and more specifically to support automatically adding annotations for verifying that integer as conversions don't overflow/underflow nor lose precision. |

Future Plans

In the short term, I will likely apply for follow-up funding for:

- improving or widening the scope of existing analyses e.g. detecting panics in pallet/runtime hooks.

- adding analyses for more vulnerability classes and insecure patterns (e.g. insufficient or missing origin checks, bad randomness, insufficient unsigned transaction validation e.t.c).

- adding support for using other specialized static analysis tools as backends for specific analyses (e.g. symbolic execution engines).

Additionally, an often noted disadvantage of building Rust static analysis tools based on MIR (and indeed plugging into the rust compiler in general), is the fact that rustc's APIs for MIR (or more generally rustc's internal APIs) are unstable. There's an ongoing effort to address some of these issues in the form of the official Stable MIR Librarification Project, however, this project is still relatively early, and at the moment, also still unstable (i.e. pre-1.0). Nevertheless, migrating pallet-verifier (and related tools like MIRAI) to the stable MIR API, whenever it's ready, should be considered a long-term goal for the project.

Another related area of interest would be either extending the scope of the tool, or creating a similar tool, to analyze ink! smart contracts using similar static analysis tools and techniques.

Finally, as the project is a public good, ultimately, the long term goal is to create a community of users, contributors and ecosystem partners who are invested in the project's long-term success because of its utility.

Referral Program (optional) 💰

N/A

Additional Information ➕

How did you hear about the Grants Program?

Web3 Foundation Website.

I have also previously successfully applied for and completed two Web3 Foundation grants (see this and this) for ink! analyzer (a collection of modular and reusable libraries and tools for semantic analysis of ink! smart contracts).